Creating an Internal Developer Platform for ML teams from the ground up (Part 0)

Introduction

Choosing a topic for the dissertation that would validate my master's degree was no mean feat, and maybe harder than actually carrying it out. As a team of three students, we came up with ideas ranging from Kubernetes chaos engineering to edge computing with GSLB (Global Server Load Balancing), but we could never settle on one.

The project had to match our two main constraints:

- It had to be technically interesting. While many students in our cohort went down the "easy on the technical side, heavy on oral waffling" path, we decided we might as well use this opportunity to expand our technical knowledge and try something we had never done before.

- It had to be doable in only a few months. As always, we waited until the last moment to start on our schoolwork, so time was limited.

While working on a PoC for a cloud development environment using Coder, a friend introduced me to Backstage: Spotify's developer portal solution. Right then, we knew we’d found our next project: building a fully self-service developer platform from scratch.

We landed on this project for a few key reasons:

- The goals were easy to define: we knew exactly where to start and, crucially, where to finish.

- It demanded a broad skill set: programming basics, DevOps, networking, storage, SRE, Kubernetes, Linux—the works.

- Explaining the platform’s advantages to non-technical stakeholders was straightforward; we could clearly outline the pain points for developers and show how our platform would resolve them.

Objectives

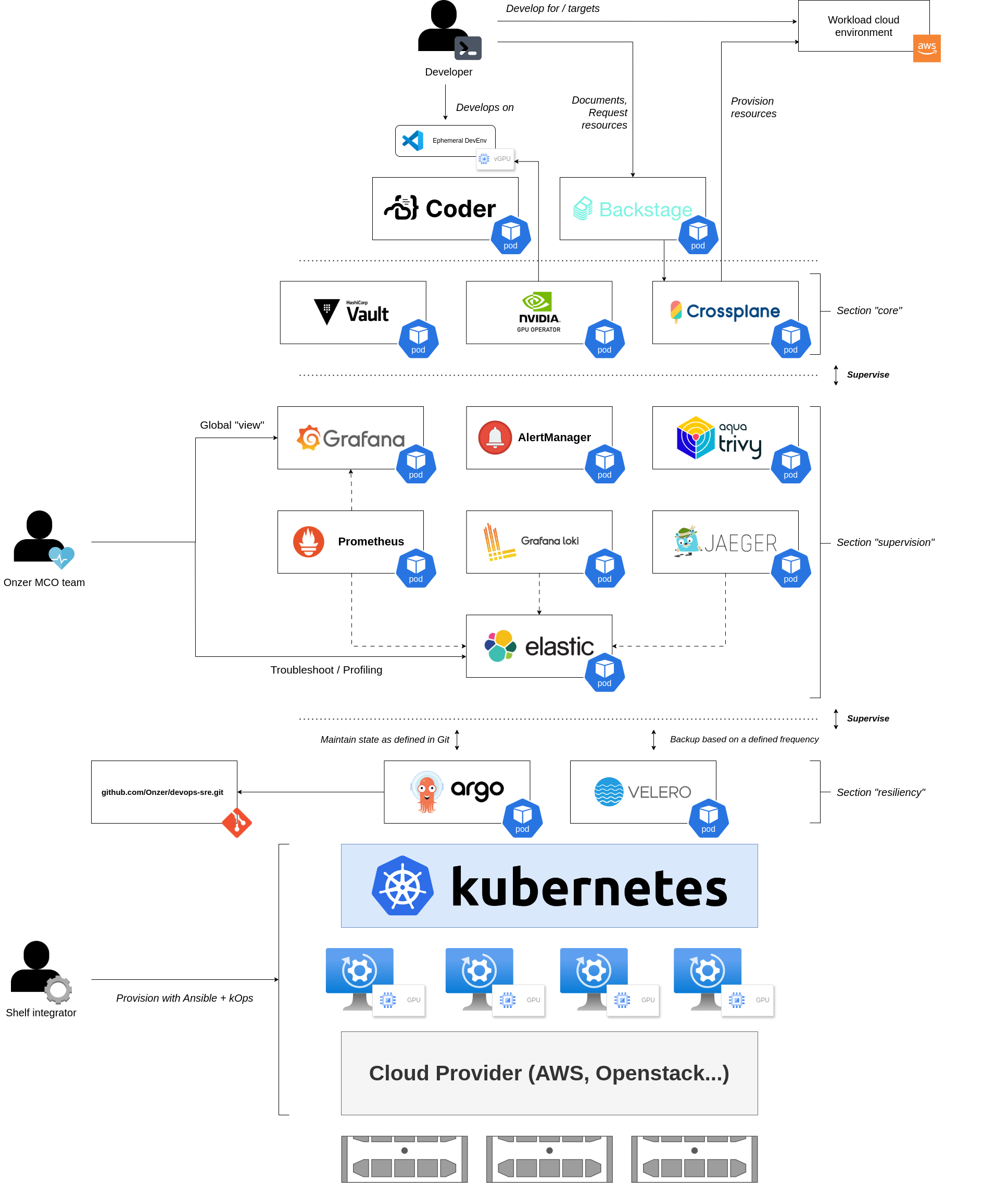

The final platform had to hit a few key marks:

- Fully-featured: from monitoring to security, it needed to be a complete, turn-key deployment solution.

- Deployed via IaC (Ansible + Terraform): we wanted the ability to rebuild the platform from scratch and reach an operational state in under an hour.

- Built around developer needs, focusing on our two main solutions: Coder and Backstage.

- Tailored for ML/HPC teams, meaning each dev environment needed GPU access, provisioned in Kubernetes through NVIDIA's GPU Operator.

- Hosted at home! Our school conveniently 'forgot' to budget for cloud resources, so we used our own OpenStack instances. Luckily, we already had them set up; mine even includes a GPU with a patched driver for vGPU (more on that in future posts).

With that, here’s the high-level architecture we devised based on our collective enterprise experience:

Conclusion

In the next part, we’ll dive into setting up the infrastructure for hosting our platform. Each part of this series is designed to equip you with everything you need to replicate this setup, whether at home or on the job. Take whatever you find useful, and don’t hesitate to reach out if you have any questions—I’m more than happy to help.